Dell XPS 13 Developer Edition – Ultrabook with Ubuntu preinstalled

Introduction

In late 2010, as I was starting a new job, I bought a new laptop, a Samsung QX410, based largely on this review.

From the beginning I was quite happy with that laptop: the screen was decent, the keyboard spacious and comfortable, battery life OK, and in retrospect it was a solidly built laptop that stood up to 2.5 years of heavy daily use.

However, eventually the time came for it to die, so in late 2013 I had to start looking for replacements. Since I still needed to work, I borrowed a Lenovo Thinkpad T520 to use temporarily. Spec-wise it was similar to the Samsung, save for the larger 15″ screen and the thing that really spoiled me, the excellent Lenovo laptop keyboard. Alas, this appears to have been lost in the latest generation; I had a chance to try a Thinkpad X240 (one of the candidates for replacing the Samsung) and found the island-style keyboard odd and uncomfortable.

At the same time I was able to use a Dell XPS 13 for a few days, and the keyboard on that little machine felt extremely comfortable and close to the Samsung’s (which I’m already used to). So the XPS 13 immediately moved to the top of the list. In early 2014 I finally pulled the trigger and got the Developer Edition XPS 13. The Developer Edition is the result of the “Sputnik Project” and has been available for all previous XPS 13 generations. Here are my impressions of it.

The XPS 13 is something of a MacBook Air lookalike, with the same slim, wedge-like shape. While its footprint is a bit smaller than that of the 13-inch Air, the two look very similar from the top. The XPS’s top lid and frame are machined aluminum, but the similarity ends there: the keyboard and deck are coated with black soft-touch rubber, and the bottom is black carbon fiber. The XPS is different enough to qualify as “inspired by”, rather than “knockoff of”.

Incidentally, the MacBook Air was also on my list of candidate laptops. The XPS 13 beat it for a few reasons: the screen is higher-resolution and touch-enabled, it’s Ubuntu-certified and available with Ubuntu preinstalled, and it has a PC keyboard. Apple keyboards are great, but I just can’t get used to the ctrl-alt-cmd layout and keep hitting the wrong keys. Cost was similar for a comparable configuration, so in the end the XPS 13 won.

Chassis

The XPS 13 is really thin, as befits a 13-inch ultrabook, and has the typical front taper to a 6-mm thinness.

The thing weighs about 1.4 kg. Since it’s so tiny it actually feels heavy for its size, but it’s by no means heavy. Carry it in a backpack and you’ll barely feel it’s there. Quite a difference from the 2.25-kg Samsung and the 2.5-kg Thinkpad T520, which looks and feels like a behemoth next to the XPS (a nostalgia shot of those laptops next to the XPS is at the end of this post).

To give an idea of the XPS’s dimensions I did a quick photo shoot next to some comparable pre-ultrabook ultraportables.

Top row shows a Dell Vostro V13 and an Acer Aspire One netbook (11″ screen). Bottom row includes the XPS 13 and the legendary Thinkpad X201 with 12″ screen. Despite having a 13″ screen, the XPS is smaller than the Thinkpad X201 and the V13. Footprint-wise, the screen is the limiting factor, so for a 13″-class system it’s reasonable to not see a huge difference.

The side view is where the XPS 13’s thinness becomes evident. The XPS 13 is on top, above the X201 and with the V13 at the very bottom.

The V13 was a tremendously thin machine for its generation, which shows in the fact that it’s almost as thin as the XPS 13’s rear. Of course, the XPS 13’s taper at the front goes on to be about as thin as the V13’s screen. The V13 made performance compromises to fit in such a svelte chassis, but in all honesty the XPS 13 is also not a speed demon, and it also has fewer ports and less expandability than other, bulkier options.

The X201 isn’t even in the same league here; comparing the thickest parts (rear end), it’s almost 3 times as thick as the XPS 13. The X201 is still quite light, about 200 g heavier than the XPS 13, and its chassis is incredibly sturdy (the XPS 13 doesn’t feel flimsy at all, but I’d hesitate to put it through the kind of abuse a Thinkpad is known to just shrug off). It also has a lot more expandability, in the form of more USB ports, an ExpressCard slot, a media card reader, ethernet and modem ports, and, incredibly, a full VGA connector.

By comparison, the XPS 13’s list of ports is short: a combo audio jack, 2 USB 3.0 ports, and a mini-DP port for external video. This is the price one pays for ultraportability…

The following pictures show the XPS 13, open, next to the V13 and the X201. The XPS 13’s keyboard looks minimalistic in comparison, but it’s quite comfortable to use. The V13’s is not as nice, while the X201 has that fantastic Lenovo keyboard.

Keyboard and touchpad

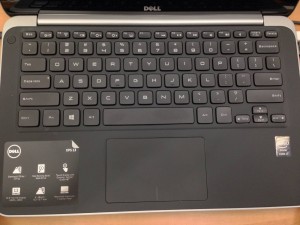

The XPS 13’s keyboard has no extraneous dedicated keys, other than the power and mute buttons; everything else is handled by the standard keys, with F-keys doubling as special-function keys to switch monitors, control wireless, show battery information, control volume and brightness as well as keyboard backlight, and perform media control functions.

All standard keys are full-sized and in their proper positions. The exceptions are the half-size F-keys (including insert and delete) and the cursor keys. One thing I don’t like is the lack of dedicated page-up and page-down keys; these are handled (along with home and end) by the cursor keys in combination with Fn. I use pg-up and pg-down extensively to switch tabs in Firefox, and this is really a sore point for me. But that’s about the only tradeoff this excellent keyboard makes.

The touchpad seems to be a Synaptics model, one of those “buttonless” trackpads, although it does have distinct clickable sections at the bottom. Unlike the Samsung’s touchpad, which was very troublesome and only worked in tap-to-click mode, this touchpad’s clickable buttons also work perfectly. You’re free to click or two-finger-tap anywhere, or use the “discrete” buttons if you like, which makes things like dragging much easier.

A backlit keyboard is nice to have, but when the light is on, an annoying high-pitched whine comes from underneath the keyboard. This problem has been reported to Dell by many users and is still awaiting a fix or response. As a result, I usually keep the backlight off.

Screen

Both the laptops I’ve been using lately had non-IPS panels with industry-standard resolutions for the time. 1366×768 for the Samsung is pretty typical. One gripe I had is that the Samsung’s screen was “protected” by a glossy sheet of cheap plastic that with time became very scratched and made the screen harder to see. This can be seen in the picture at the end of this post.

The XPS 13’s screen is stunning by comparison, if only because it’s a much newer panel. Viewing angles are amazing, the 1920×1080 resolution is razor-sharp (and even a bit too high for the screen size), and the backlight is strong enough to overcome the gloss of the Gorilla Glass cover. At least I expect it won’t get scratched easily. This is a Synaptics touchscreen which has worked very well with Ubuntu, although I haven’t used it all that much because it feels very alien to my workflow.

Performance and battery life

I’m ill-equipped to provide an assessment here, as the jump from the pre-Sandy Bridge Samsung to the Haswell XPS 13 (plus a bump from an i5 to an i7 CPU) is so enormous that this machine just feels like it flies. One thing worth mentioning is that, while the Samsung had a standard mobile CPU (i5 480M with 3 MB cache and 35W TDP), the XPS 13 has the aforementioned, newer i7 with 4 MB cache, but in a low-TDP (only 15W) ULV variant. So by its ultraportable nature it’s on the lower side of the specs spectrum. However, the generational advantage plus i7-ness really do make a difference, and the system is snappy at all times.

Perhaps the biggest leap forward is the LiteOn SSD. While not hooked up directly to PCIe like a MacBook’s, this mSATA drive is absurdly fast in comparison to what I’d been using, resulting in a 3-second boot time (even with disk encryption), way faster than the 20-30 seconds I was getting on the Samsung.

Under moderate load (a few terminal windows open where I’m typing stuff, plus a browser with a few tabs, one of which is playing a YouTube video), the XPS 13 reports a battery lifetime of about 6 hours. For comparison, the Samsung lasted about 3 hours with a comparable workload on a 66Wh battery.

Given a mostly-idle workload (browser with static content plus a few terminal windows), the XPS 13 reports about 8 hours of battery life.
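To put the Samsung figure in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes the battery drains its full rated capacity over the reported runtime, which is a simplification; the function name is just illustrative. I don’t quote the XPS 13’s battery capacity above, so the same calculation isn’t done for it.

```python
def average_draw_watts(capacity_wh: float, runtime_hours: float) -> float:
    """Average power draw implied by draining `capacity_wh` over `runtime_hours`.

    Assumes the full rated capacity is usable, which is only an approximation.
    """
    if runtime_hours <= 0:
        raise ValueError("runtime_hours must be positive")
    return capacity_wh / runtime_hours


# Samsung QX410: 66 Wh battery, about 3 hours under moderate load.
samsung_draw = average_draw_watts(66, 3)
print(f"Samsung average draw: {samsung_draw:.1f} W")  # prints 22.0 W
```

In other words, the Samsung was pulling something like 22 W on average under that workload, more than the 15W TDP of the XPS 13’s CPU alone.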

Software

Perhaps the nicest thing about the XPS 13 is that it’s certified for Ubuntu, and the Developer Edition I got comes preinstalled with Ubuntu 12.04, augmented by some OEM-specific tweaks to ensure all the hardware works correctly. Indeed everything works out of the box, and the first-boot experience is very smooth and polished, definitely less cumbersome than booting a Windows machine for the first time.

In case it’s needed, a utility to create a recovery disk is provided. I created a USB stick which can be used to quickly restore the machine to factory status. I then proceeded to erase the preinstalled Ubuntu version and install the latest development release (which will be released as Ubuntu 14.04). Don’t get me wrong, the preinstall is perfectly usable for 99% of people: it has a typical Ubuntu installation with all the usual tools, receives security and browser upgrades until 2017, and even includes a plethora of cloud software development tools such as Juju and VirtualBox (this is why it’s called a “Developer Edition” and is focused on cloud development). However, because of my work, I really wanted to have the newest possible Ubuntu version. Another motive was to verify whether the OEM-specific tweaks in the preinstalled version were “upstreamed” and made available in subsequent Ubuntu versions. This is a policy of the Ubuntu certification program; whenever possible, the work done when enabling a new machine is made available in the following stock Ubuntu release.

With a couple of exceptions, everything continued to work just as it did with the preinstalled version, and I was able to recreate a working environment complete with a transfer of my backup in only a few minutes. The fast SSD and USB3.0 transfers from my backup drive are partly to thank for this.

As exceptions, the touchpad didn’t get recognized and required blacklisting an i2c-hid module; and I lost the media control keys (which I seldom use, so I haven’t bothered to re-enable them).
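For reference, blacklisting a kernel module on Ubuntu usually comes down to a one-line modprobe configuration file. The fragment below is a sketch rather than a record of the exact change: the file name is hypothetical, and the module name `i2c_hid` is an assumption based on the module mentioned above.

```
# /etc/modprobe.d/blacklist-i2c-hid.conf  (hypothetical file name)
# Keep the i2c-hid driver from loading automatically (module name assumed).
blacklist i2c_hid
```

After creating the file, running `sudo update-initramfs -u` and rebooting is typically needed for the change to take effect.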

Since stock Ubuntu works this well, it’s a great alternative for regions where the Developer Edition is unavailable: just procure the Windows version of this laptop, install the latest Ubuntu, and you’ll end up with a working system.

Here’s a quick overview of the things I didn’t like about the XPS 13. Of course, none of them were deal-breakers for me, but I wanted to sum them up to highlight the fact that yes, it’s not a perfect machine.

- Whining electrical noise (a defect, so once Dell confirms this is fixable I’ll apply for warranty service).

- Lack of dedicated pgup/pgdn keys.

- Screen a bit too glossy.

- A dearth of ports.

- Screen resolution too high for my poor, tired eyes (I’m half-kidding with this one).

To sum up, despite the above, I very highly recommend the XPS 13 Developer Edition. In addition to the sleek and solid hardware, you get Ubuntu preinstalled which will cover 99% of people’s needs, a system which benefits from the upstreamed enablement work resulting in an excellent platform to run the latest Ubuntu, *and* you send the message that Ubuntu preinstalls are desired by users, all while freeing yourself from the Microsoft tax which has plagued Linux laptop users for so long.